Deepfakes: Enemy of the Truth #01

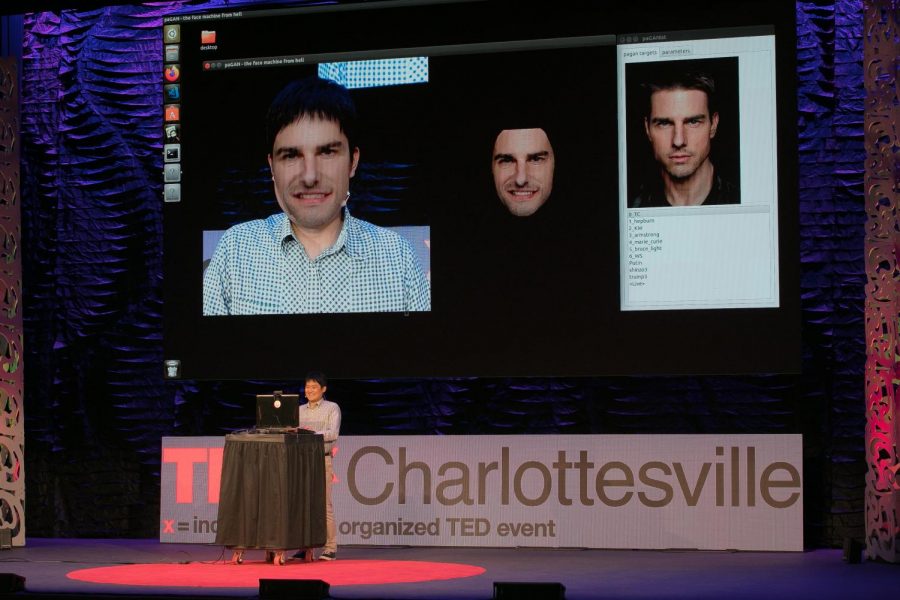

EDMOND JOE VIA FLICKR

As deepfake technology evolves, the veracity of video is called into question.

March 10, 2020

Imagine you saw yourself on TV saying or doing something that compromised everything you stood for. In reality it wasn’t you, but a deepfake. Who would believe you? First, what are deepfakes, and why do we need to be so afraid of them? A deepfake is a realistic digital manipulation of sound, images, or video that impersonates someone and fools people into believing the artifice is real.

They are created with machine-learning algorithms, mainly neural networks, together with facial mapping software. It’s enough to send cold shivers up and down one’s spine. How far can deepfakes infiltrate our lives?

The deepfakes found in popular apps such as TikTok, FaceSwap, Zao and many others are a source of laughs and seem fun, engaging and, most of all, harmless.

Although they appear innocent, they pose a monumental threat to the very fabric of society: our trust in what we see and hear in the media. As our ability to believe our own senses is violated, our certainty about what we know to be true slowly decays.

One of the horrors caused by this unnerving technology is deepfake porn. These videos realistically swap a person’s face onto another person engaging in a sex act. This issue has gained particular media attention as celebrities like Emma Watson have fallen victim to this cruel terrorism. The reputational harm and the feeling of shame caused by those videos are beyond our imagination and have the potential to ruin a person’s life in the blink of an eye.

Furthermore, blackmail will reach new heights, as it has never been easier to fabricate incredibly damaging ‘evidence’, such as bogus racist comments or adultery. Conversely, as deepfakes grow more common, they could be used to invalidate genuine evidence simply by claiming that it is in fact a deepfake.

The concerns surrounding “fake news” will rise to new dimensions. Polarization in elections could far exceed what we have encountered thus far. As a weapon of propaganda, the deepfake can escalate fear and unleash chaos, perpetuating a loss of confidence in political leaders and institutions.

A deepfake could, for instance, falsely depict soldiers murdering innocent children, or government officials colluding with enemy spies to reveal national secrets. If deepfakes portrayed any of these scenes, what would happen to civil society? They could ultimately call into question the very future of our democracy.

Videos already released include a realistic Donald Trump explaining money laundering 101 and Mark Zuckerberg convincingly bragging that Facebook owns its users. Even skeptics can be fooled into believing the ‘truth’ of their own eyes and ears.

But the potentially fatal consequences of deepfakes do not stop at misleading the ordinary citizen. Governments might use the generated data for their own agendas. Recently the video-sharing app TikTok implemented a deepfake tool. U.S. Senator Josh Hawley has accused TikTok of sending detailed, multiple-angle biometric scans of its 800 million (300 million non-Chinese) subscribers’ faces to the Chinese government for use in facial recognition, surveillance and tracking. Consequently, the U.S. military has banned its servicemen and women from using the app.

Malicious deepfake users might eventually be able to defraud banks through facial payment systems. Currently, Alibaba claims it is impossible to break its Alipay ‘Smile to Pay’ service this way. Nevertheless, there are already at least three known cases in which deepfake audio has been used to steal money from companies.

What can be done to protect ourselves from this technology? At this point, the most effective tool we have is awareness. Disproving the videos still faces technological obstacles, but scientists all over the world are rushing to find countermeasures.

Currently, neural networks do not yet have a profound understanding of the human face and so at times generate unnatural artifacts such as inconsistent blinking and pixel inconsistencies. Researchers at UC Berkeley developed an AI algorithm to detect these vicious videos by tracking head and gesture movements. However, the head movement detector might only be able to protect world leaders and celebrities, as the algorithm has to be trained on the individual in question, making it less effective for the general public. Even if we eventually manage to detect and delete deepfakes efficiently, the internet does not forget.

Another way to tackle the problem is through legal tools. Yet defamation and criminal law will not go far enough. California, where deepfakes of politicians are deemed illegal within 60 days of an election, has difficulty enforcing this law.

“… [T]he robust protections for noncommercial expressive conduct (for example, satire or parody) make it more difficult to impose restrictions on deepfakes,” information law professor Olivier Sylvain of Fordham Law points out. “Hence, to challenge deepfake videos might be easier accomplished through the use of copyright or right of publicity claims, which present their own challenges.”

The race has begun to fight this dangerous technology and its devastating consequences. Currently, creating realistic deepfakes requires expertise. However, there is no doubt that the technology will advance to a level where any 12-year-old could use the software on a whim. Fueled by artificial intelligence, digital impersonation is on the upswing.

Ultimately, even the truth may be dismissed as false.